Discover the key differences between data orchestration and ETL, and learn how modern ETL platforms like DataLark bridge the two approaches.

Data Orchestration vs. ETL: The Complete 2025 Guide to Data Pipeline Automation

Data doesn’t live in one place anymore — it flows between SAP S/4HANA, Salesforce, Snowflake, and countless other systems. Keeping that flow seamless, accurate, and real-time is no small feat.

For decades, ETL (Extract, Transform, Load) powered data integration. But in today’s hybrid, cloud-driven world, ETL alone can’t handle the complexity of modern pipelines.

That’s where data orchestration steps in — not to replace ETL, but to make it smarter. It automates, monitors, and coordinates data across every layer of your ecosystem, connecting SAP and non-SAP systems into one unified flow.

In this guide, we’ll clarify the difference between data orchestration and ETL, explain how they work together, and show how platforms like DataLark help enterprises turn data movement into data intelligence.

The New Reality of Data: Why Orchestration Is On the Rise

In 2025, data architecture looks nothing like it did a decade ago. Enterprises now manage vast ecosystems of systems — SAP S/4HANA, SAP BW/4HANA, Salesforce, Snowflake, AWS, Databricks — all generating and consuming data at high velocity. The challenge is no longer just moving that data; it’s about synchronizing, governing, and activating it across platforms in real time.

For years, ETL (Extract, Transform, Load) was the workhorse behind data integration. It extracted information from systems like SAP, transformed it to fit a common schema, and loaded it into centralized repositories for analysis. For many organizations, that approach worked, as long as data lived in a controlled, mostly on-premises world.

But today’s reality is far more dynamic. Data flows continuously between ERP systems, APIs, streaming platforms, and machine learning models. Batch-driven ETL pipelines, while still essential, often can’t keep pace with the real-time data processing demands of business operations or analytics. When an SAP inventory update needs to trigger an instant refresh in a cloud dashboard, waiting for an overnight ETL job just doesn’t cut it.

That’s why data orchestration has become the backbone of the modern data stack. Instead of focusing on transformation alone, orchestration manages how and when data processes run across every system, environment, and tool. It ensures SAP data syncs with cloud warehouses, APIs, and analytics tools in the correct sequence, turning fragmented data tasks into cohesive, intelligent workflows.

The numbers tell a compelling story: the global data orchestration market is projected to reach $4.3 billion by 2034, growing at a compound annual growth rate (CAGR) of 12.1%.

As we move further into 2025, the distinction between data orchestration vs ETL isn’t just technical — it’s strategic. Companies that master orchestration gain the agility to adapt, automate, and innovate faster than ever before.

What Is ETL?

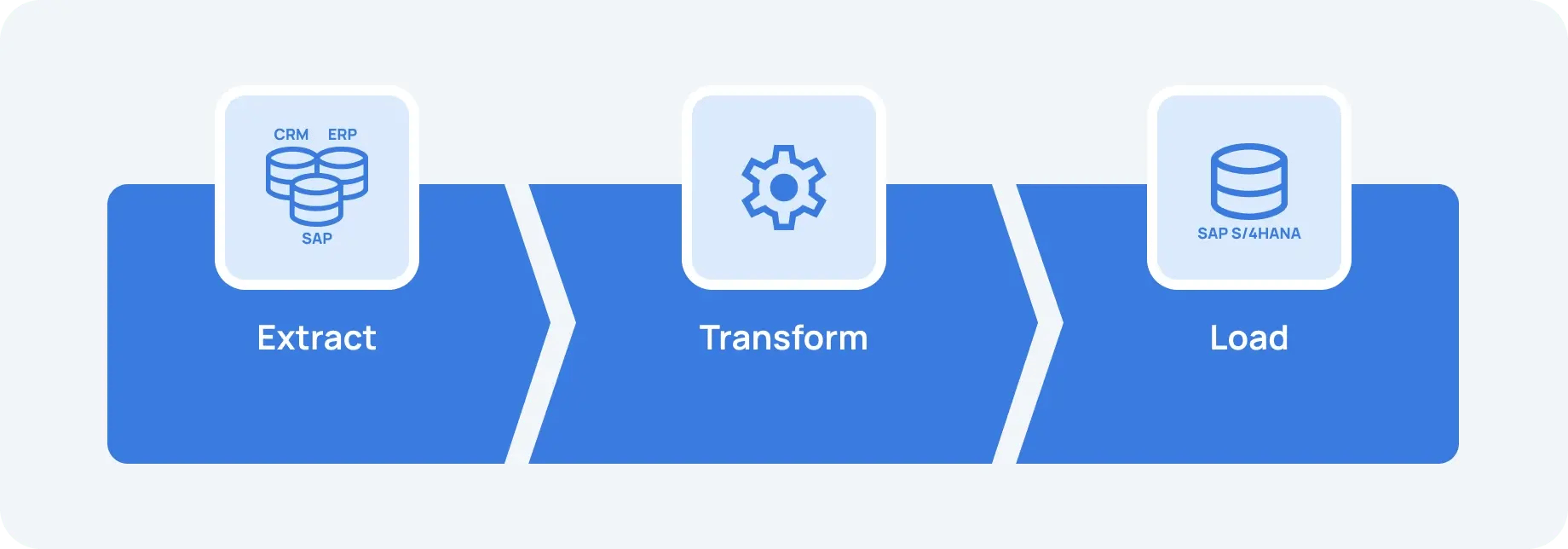

Before we explore how orchestration changes the game, it’s worth revisiting the foundation: ETL — short for Extract, Transform, Load.

At its core, ETL is a process designed to consolidate data from multiple systems into a single, consistent format for analysis. It’s been the bedrock of enterprise data management for decades — particularly in SAP-centered landscapes, where clean, governed data is non-negotiable.

Here’s how it works:

- Extract: Data is pulled from source systems such as SAP S/4HANA or SAP ECC, or from external CRMs and e-commerce platforms.

- Transform: That data is standardized, enriched, or cleansed. For example, sales orders from SAP might be converted into a common currency or mapped to a unified product hierarchy.

- Load: The transformed data is then pushed into a data warehouse or lake, like Snowflake, Google BigQuery, or SAP BW/4HANA, for analytics and reporting.
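The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the `SalesOrder` type, the `RATES_TO_USD` table, the sample rows, and the in-memory `warehouse_table` are all hypothetical stand-ins for a real SAP extract and a real warehouse load.

```python
from dataclasses import dataclass

# Hypothetical exchange rates to USD; a real pipeline would read these from a rates table.
RATES_TO_USD = {"USD": 1.0, "EUR": 1.1, "GBP": 1.25}


@dataclass
class SalesOrder:
    order_id: str
    amount: float
    currency: str


def extract() -> list[SalesOrder]:
    """Stand-in for pulling rows from a source system such as SAP."""
    return [
        SalesOrder("4500001", 200.0, "EUR"),
        SalesOrder("4500002", 80.0, "GBP"),
        SalesOrder("4500003", 50.0, "USD"),
    ]


def transform(orders: list[SalesOrder]) -> list[dict]:
    """Convert every order amount into a common currency (USD)."""
    return [
        {
            "order_id": o.order_id,
            "amount_usd": round(o.amount * RATES_TO_USD[o.currency], 2),
        }
        for o in orders
    ]


def load(rows: list[dict], warehouse: list[dict]) -> int:
    """Append rows to the target; a plain list stands in for a warehouse table."""
    warehouse.extend(rows)
    return len(rows)


warehouse_table: list[dict] = []
loaded = load(transform(extract()), warehouse_table)
print(loaded, warehouse_table)
```

Keeping each step as its own function mirrors how ETL tools separate connectors, transformation logic, and targets, so any one stage can be swapped without touching the others.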

This approach has powered countless business intelligence systems, from SAP BW dashboards to modern self-service analytics. ETL ensures consistency, compliance, and data quality — three pillars that keep operations and decision-making stable.

However, the traditional ETL model was built for batch processing: running nightly or hourly jobs. That made sense when data moved slowly, but it falls short in today’s real-time, API-driven world.

When a sales transaction happens in SAP, a customer logs a support ticket in Zendesk, and a predictive model in Databricks must respond instantly — ETL alone can’t orchestrate those events. It still plays a vital role, but it operates as one component of a much larger data ecosystem.

That’s why understanding data orchestration vs. ETL is so important: orchestration doesn’t replace ETL — it makes it responsive, connected, and aware of the bigger picture.

What Is Data Orchestration?

If ETL is the engine that moves and shapes data, then data orchestration is the control tower that ensures every process runs at the right time, in the right order, and for the right reason.

In simple terms, data orchestration is the coordination and automation of all data workflows — ETL jobs, API syncs, analytics updates, and machine learning pipelines — across your entire data landscape. It’s about managing how data flows, not just where it goes.

Think of a global company running SAP S/4HANA for finance, Salesforce for CRM, and Snowflake for analytics. Each system has its own rhythm and rules.

ETL can extract and transform data from SAP to Snowflake, but orchestration determines:

- When the ETL job should start.

- What happens if SAP data is delayed or incomplete.

- Whether a Power BI dashboard should refresh automatically when a new batch lands.

- How multiple ETL pipelines run in sequence — or in parallel — without conflicts.

That’s where orchestration tools like DataLark come in. They don’t just move data; they govern the flow. They monitor dependencies, manage retries, alert teams when something fails, and trigger downstream processes — all automatically.
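To make dependency tracking, retries, and alerting concrete, here is a small sketch built on Python's standard-library `graphlib`. It is not how DataLark or any specific orchestrator is implemented; the task names, the `run_workflow` helper, and the simulated flaky extract are assumptions chosen for illustration.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_workflow(
    tasks: dict[str, Callable[[], None]],
    depends_on: dict[str, set[str]],
    max_attempts: int = 3,
) -> list[str]:
    """Run tasks in dependency order, retrying failures; return a run log."""
    log: list[str] = []
    for name in TopologicalSorter(depends_on).static_order():
        for attempt in range(1, max_attempts + 1):
            try:
                tasks[name]()
                log.append(f"{name}: ok (attempt {attempt})")
                break
            except Exception as exc:
                log.append(f"{name}: failed (attempt {attempt}): {exc}")
        else:
            # Every attempt failed: record an alert and stop so downstream tasks never run.
            log.append(f"ALERT: {name} exhausted retries; workflow halted")
            return log
    return log


# Hypothetical tasks: the extract fails once to simulate a delayed source.
calls = {"extract_sap": 0}


def flaky_extract() -> None:
    calls["extract_sap"] += 1
    if calls["extract_sap"] == 1:
        raise TimeoutError("source not ready")


tasks = {
    "extract_sap": flaky_extract,
    "transform": lambda: None,
    "load_snowflake": lambda: None,
    "refresh_dashboard": lambda: None,
}
# Each key lists the tasks that must finish before it can start.
depends_on = {
    "transform": {"extract_sap"},
    "load_snowflake": {"transform"},
    "refresh_dashboard": {"load_snowflake"},
}

run_log = run_workflow(tasks, depends_on)
print("\n".join(run_log))
```

The orchestration logic knows nothing about what each task does. It only decides order, retry behavior, and when to stop, which is the separation of concerns described above.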

In an SAP context, orchestration can:

- Trigger ETL jobs immediately after a data load in SAP BW/4HANA.

- Automate SAP-to-Snowflake synchronization without manual scheduling.

- Coordinate complex workflows where SAP, Azure Data Factory, and Databricks each play a role.

- Ensure real-time integration between SAP and non-SAP systems for unified analytics or AI models.

The key distinction in the data orchestration vs ETL conversation is:

- ETL focuses on data transformation.

- Orchestration focuses on process transformation — automating how data operations interact across an entire ecosystem.

In other words, ETL moves data through a pipeline. Orchestration ensures the entire data supply chain runs like clockwork.

Data Orchestration vs ETL: Key Differences

Now that we’ve defined both concepts, let’s look at where data orchestration and ETL truly diverge — and how they work together to power modern data ecosystems.

At a glance, ETL is a process for preparing and loading data. Data orchestration is a framework for managing how all those processes connect, depend on, and trigger each other.

The distinction might sound subtle, but in practice it’s transformational — especially in SAP-driven enterprises, where workflows span ERP systems, cloud platforms, and analytics environments.

Here’s a side-by-side view:

| Aspect | ETL (Extract, Transform, Load) | Data Orchestration |
| --- | --- | --- |
| Primary Goal | Move and transform data | Coordinate and automate data workflows |
| Scope | Individual pipeline or dataset | Entire data ecosystem (multiple pipelines, systems, and tools) |
| Processing Mode | Batch (scheduled jobs) | Real-time and event-driven, with dependency control |
| Control Logic | Limited – defined within each ETL job | Centralized – defines the logic, order, and conditions of many jobs |
| Tools | SAP Data Services, Fivetran, Talend, Informatica, DataLark | Apache Airflow, Prefect, Dagster, DataLark |
| Observability | Basic logs | Full visibility into workflow dependencies, success/failure states, and timing |
| Flexibility | Fixed workflows | Dynamic and adaptive workflows |
| Typical Use Case | Load SAP data into Snowflake or SAP BW | Automate end-to-end flows: SAP → ETL → Snowflake → Power BI or ML model |

An example of how this plays out in practice: a manufacturing company uses SAP S/4HANA to track production orders. ETL jobs extract and transform that data into Snowflake each night for reporting. With data orchestration, the process evolves — when an SAP order closes, DataLark triggers an immediate ETL pipeline, validates data quality, updates Snowflake in real time, and refreshes dashboards automatically. No delays, no manual scheduling, no stale insights.
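The event-driven flow in this example can be approximated with a simple publish/subscribe pattern. The sketch below is an assumption-laden illustration rather than DataLark's actual mechanism: the `EventBus` class, the event names `order.closed` and `warehouse.updated`, and the in-memory lists standing in for Snowflake and a dashboard are all hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Tiny in-process publish/subscribe dispatcher."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        for handler in self._handlers[event_type]:
            handler(payload)


bus = EventBus()
snowflake_rows: list[dict] = []      # stand-in for a warehouse table
dashboard_refreshes: list[str] = []  # stand-in for BI refresh calls
rejected: list[dict] = []            # rows that failed the quality gate


def on_order_closed(order: dict) -> None:
    # Quality gate: reject rows with a missing ID or a non-positive quantity.
    if not order.get("order_id") or order.get("quantity", 0) <= 0:
        rejected.append(order)
        return
    snowflake_rows.append({"order_id": order["order_id"], "quantity": order["quantity"]})
    # Only a successful load announces that downstream consumers can refresh.
    bus.publish("warehouse.updated", {"table": "production_orders"})


def on_warehouse_updated(info: dict) -> None:
    dashboard_refreshes.append(info["table"])


bus.subscribe("order.closed", on_order_closed)
bus.subscribe("warehouse.updated", on_warehouse_updated)

bus.publish("order.closed", {"order_id": "1000123", "quantity": 40})
bus.publish("order.closed", {"order_id": "", "quantity": 5})  # fails validation
print(snowflake_rows, dashboard_refreshes, rejected)
```

Because the dashboard refresh subscribes to the load event rather than to a clock, it runs only when fresh, validated data has actually landed.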

That’s the true power of orchestration: it turns ETL into part of a living, responsive data ecosystem. Rather than focusing on a single data flow, orchestration sees the big picture — ensuring that SAP, APIs, cloud warehouses, and AI models stay in sync with minimal human intervention.

Do You Need Both ETL and Orchestration?

Yes — and they’re stronger together.

While ETL remains the foundation of any reliable data pipeline automation, orchestration provides the structure that keeps those pipelines aligned, efficient, and responsive. Most modern data teams rely on a combination of both: ETL to move and prepare data, and orchestration to coordinate and monitor the flow of those processes.

In practice, ETL still does the heavy lifting. It’s what extracts data from SAP S/4HANA, transforms it into a consistent model, and loads it into a target system such as Snowflake or SAP BW/4HANA. Without strong ETL logic, no orchestration layer can function effectively.

What’s changing today is the expectation that ETL itself should be smarter: more automated, event-aware, and integrated across systems.

Modern ETL platforms, including DataLark, now offer features that help close this gap with:

- Automated job scheduling to eliminate manual triggers.

- Dependency management to ensure one process finishes before another begins.

- Workflow visibility for monitoring data pipelines end-to-end.

For example, a DataLark pipeline might extract data from SAP S/4HANA, transform it for analytics, and then automatically trigger a load into Snowflake once the SAP data refresh completes — all within a single coordinated process.

This level of automation doesn’t replace full-scale orchestration tools like Airflow or Prefect, but it gives teams a simpler, integrated way to run reliable, connected ETL pipelines without adding operational overhead.

So, while ETL and orchestration remain distinct disciplines, the line between them is becoming more fluid. Tools like DataLark make it easier for teams to bridge the two — keeping ETL at the core, but adding the intelligence and automation that modern data environments demand.

The Future of ETL: Smarter, More Connected, More Context-Aware

The next wave of data innovation isn’t about replacing ETL — it’s about making it intelligent and context-aware. As architectures grow more distributed, companies will expect ETL tools to behave less like static pipelines and more like adaptive systems that can learn, monitor, and optimize themselves.

Three big trends are already shaping this future:

- Event-driven data movement: Instead of relying on rigid schedules, future ETL processes will respond to real-time business events, such as a new sales order in SAP S/4HANA or a stock update in an e-commerce platform. Triggers will replace timers and keep analytics continuously fresh without unnecessary runs.

- Integrated data observability: Visibility will become just as important as transformation. Teams will expect their ETL tools to surface anomalies, schema changes, and latency issues automatically — closing the feedback loop between data engineering and business operations.

- AI-assisted optimization: Machine learning will start fine-tuning ETL pipelines on its own: predicting bottlenecks, dynamically allocating compute resources, and recommending transformation logic based on historical performance and usage patterns.
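As a rough illustration of the observability trend, the following sketch flags schema drift and stale loads. The expected schema, the column names, and the one-hour freshness threshold are hypothetical choices, and a real tool would likely track far more signals than these two.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract for an incoming row: column name -> expected Python type.
EXPECTED_COLUMNS = {"order_id": str, "amount": float, "currency": str}


def schema_issues(row: dict) -> list[str]:
    """Report missing columns, type changes, and unexpected columns."""
    issues: list[str] = []
    for col, typ in EXPECTED_COLUMNS.items():
        if col not in row:
            issues.append(f"missing column: {col}")
        elif not isinstance(row[col], typ):
            issues.append(
                f"type change in {col}: expected {typ.__name__}, "
                f"got {type(row[col]).__name__}"
            )
    for col in sorted(row.keys() - EXPECTED_COLUMNS.keys()):
        issues.append(f"unexpected column: {col}")
    return issues


def is_stale(last_loaded_at: datetime, now: datetime, max_lag: timedelta) -> bool:
    """True when the most recent load is older than the allowed lag."""
    return now - last_loaded_at > max_lag


# A row where 'amount' arrived as text, 'currency' vanished, and 'plant' appeared.
drifted_row = {"order_id": "4500001", "amount": "200.00", "plant": "DE01"}
issues = schema_issues(drifted_row)

now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
stale = is_stale(now - timedelta(hours=3), now, max_lag=timedelta(hours=1))
print(issues, stale)
```

Checks like these are cheap to run after every load, which is what makes it practical to surface problems automatically instead of waiting for an analyst to notice a broken dashboard.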

DataLark is evolving along this path — focusing on automation, transparency, and adaptability within its ETL foundation. Rather than trying to replace full orchestration platforms, DataLark aims to make ETL smarter: easier to manage, quicker to respond, and more aware of its place in a larger data ecosystem.

In that sense, the future isn’t about ETL versus orchestration. It’s about building a connected data infrastructure where transformation, automation, and intelligence work together — turning the movement of data into a continuously optimized process.

Case Study: Streamlining SAP-to-Snowflake Data Pipelines with DataLark

To see how these concepts play out in practice, let’s look at a real-world example of how a global manufacturing company used DataLark to modernize its SAP data pipelines — achieving orchestration-level automation while keeping ETL at the core.

Challenge

The company relied heavily on SAP S/4HANA to manage production, logistics, and financials. Every night, a series of ETL jobs extracted SAP data, transformed it, and loaded it into Snowflake for reporting.

However, the process had several limitations:

- Jobs ran on fixed schedules, regardless of when SAP data was updated.

- Failures in one ETL pipeline sometimes delayed others, with no automatic recovery.

- Analysts couldn’t always trust data freshness in dashboards.

The data engineering team wanted a more responsive and reliable approach — one that didn’t require adopting a full orchestration platform, but could deliver similar coordination.

DataLark Solution

The team implemented DataLark as their central ETL platform, configuring pipelines to handle data extraction from SAP S/4HANA tables via OData APIs and transformation logic before loading to Snowflake.

To make the process more dynamic, they used DataLark’s automation and dependency management features to:

- Trigger jobs based on SAP data updates: When new production orders were posted in SAP, a webhook initiated the corresponding ETL pipeline in DataLark automatically — no manual scheduling required.

- Chain dependent pipelines: Downstream jobs, such as currency normalization or product hierarchy enrichment, only began once the initial extraction completed successfully.

- Monitor and alert in real time: DataLark’s built-in monitoring dashboard provided visibility across all active jobs, notifying the team immediately if a transformation step failed or lagged.

- Feed insights directly to BI: Once Snowflake loads were complete, a trigger launched a Power BI dataset refresh, ensuring dashboards reflected the latest SAP data within minutes.

Outcome

Within weeks, the company achieved:

- 40% faster data availability in analytics dashboards.

- Reduced manual oversight, as job dependencies handled themselves.

- Higher trust in SAP data, thanks to consistent and observable pipelines.

Most importantly, the team didn’t need to introduce a complex orchestration platform — they achieved the right balance between automation and simplicity by extending their ETL workflows intelligently within DataLark.

Conclusion

The solution to the ETL vs. orchestration dilemma isn’t about replacing one technology with another; it’s about raising the level of intelligence in how data moves across an organization.

Enterprises running SAP, cloud, and AI systems side by side can’t afford disjointed data processes. They need automation, visibility, and adaptability — but they also need the reliability and structure that ETL provides.

That’s where platforms like DataLark play a critical role: empowering data teams to go beyond traditional ETL, without the complexity of full orchestration frameworks. By automating dependencies, improving monitoring, and connecting workflows across systems, DataLark helps organizations build pipelines that are faster, smarter, and more resilient — all while staying grounded in the proven principles of ETL.

The takeaway is simple: ETL remains the backbone of enterprise data. But as data environments grow more interconnected, tools that combine ETL strength with orchestration agility will define the next generation of data excellence. Request a demo of DataLark and check it out yourself.

FAQ

What’s the main difference between data orchestration and ETL?

The key difference lies in their scope and purpose. ETL (Extract, Transform, Load) focuses on moving and preparing data: extracting it from sources such as SAP S/4HANA, transforming it into a usable format, and loading it into a target system like Snowflake or SAP BW/4HANA.

Data orchestration, on the other hand, manages the coordination of multiple data processes. It ensures that various ETL jobs, API syncs, and analytics updates run in the right order, with proper dependencies and error handling.

In short: ETL is about data transformation, while orchestration is about workflow automation across the entire data ecosystem.

Do modern ETL tools include orchestration features?

Yes — many modern ETL platforms, including DataLark, have begun incorporating orchestration-like automation features. These include:

- Job scheduling and chaining, so processes run automatically after dependencies complete.

- Event-based triggers, enabling pipelines to start when new SAP data or files become available.

- Workflow visibility and alerts, providing real-time monitoring for data teams.

While these capabilities don’t replace full-scale orchestration tools, such as Airflow or Prefect, they make ETL pipelines smarter, more connected, and less dependent on manual oversight.

Can I replace my ETL tool with a data orchestration platform?

Not exactly. ETL tools and orchestration platforms serve complementary purposes. ETL handles data preparation — extracting, transforming, and loading data with strong validation and governance controls. Orchestration platforms handle workflow management, automating the execution of multiple ETL and non-ETL tasks.

For most organizations, the best approach is a hybrid model: keep your ETL tool as the transformation backbone, while adding orchestration for broader process control if needed.

How does DataLark help bridge ETL and orchestration?

DataLark is designed as a modern ETL platform that simplifies and automates complex data workflows. It helps bridge the ETL–orchestration gap by:

- Allowing teams to chain dependent ETL jobs so they run sequentially or in parallel.

- Supporting event-based triggers that start pipelines when data in SAP or other systems changes.

- Offering centralized monitoring for visibility and quick troubleshooting.

This gives organizations orchestration-level control over their ETL pipelines — without introducing the complexity of a separate orchestration system.

How does this apply to SAP-driven environments?

In SAP-centric data landscapes, orchestration and ETL play a vital role in connecting operational data with analytics and cloud platforms. For instance, DataLark can:

- Extract data from SAP S/4HANA using OData APIs or CDS views.

- Transform and validate the data according to business logic.

- Automatically load it into a warehouse like Snowflake or Azure Synapse.

- Trigger downstream refreshes in BI tools such as Power BI or Tableau.

This ensures that SAP data stays accurate, timely, and aligned with business processes across systems.
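For readers curious what the OData extraction step might look like at the request level, here is a hedged sketch that builds a query URL with the standard OData `$select`, `$filter`, and `$top` options. The host, service path, entity set, and field names are hypothetical placeholders, and this is not a description of how DataLark itself issues requests.

```python
from urllib.parse import quote, urlencode

# Hypothetical host and service path; substitute the OData service your SAP system exposes.
BASE_URL = "https://sap.example.com/sap/opu/odata/sap/ZPRODUCTION_ORDERS_SRV/Orders"


def build_odata_url(base_url: str, select: list[str], filter_expr: str, top: int) -> str:
    """Build a request URL from $select, $filter, and $top query options."""
    params = {
        "$select": ",".join(select),
        "$filter": filter_expr,
        "$top": str(top),
    }
    # Keep "$", "," and "'" literal, and encode spaces as %20 rather than "+".
    query = urlencode(params, safe="$,'", quote_via=quote)
    return f"{base_url}?{query}"


url = build_odata_url(
    BASE_URL,
    select=["OrderID", "Material", "Plant"],  # hypothetical field names
    filter_expr="Plant eq 'DE01'",
    top=100,
)
print(url)
```

Selecting only the needed fields and filtering at the source keeps extracts small, which matters when pipelines are triggered frequently rather than once a night.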

Is data orchestration replacing ETL?

No — it’s evolving around ETL.

ETL remains the foundation of structured data management, especially for compliance-heavy and SAP-integrated environments. What’s changing is how ETL operates within larger ecosystems. Orchestration adds intelligence, adaptability, and visibility, allowing ETL pipelines to respond dynamically to events rather than running on fixed schedules.

In essence, orchestration doesn’t replace ETL — it makes it smarter and more connected.

What’s the future of ETL and data orchestration?

The future lies in convergence. ETL tools will increasingly include automation, event handling, and observability features that once belonged exclusively to orchestration platforms. Meanwhile, orchestration tools will become more data-aware, integrating directly with transformation logic.

As this convergence accelerates, tools like DataLark will play a key role — enabling teams to manage both transformation and coordination from a single, intuitive platform that connects SAP, cloud, and analytics environments.